Imagine having 15,000+ MAC addresses in a Cisco ISE database. All these MAC addresses are used to gain access to wireless networks protected with WPA2-PSK and MAC filtering. But how do you make sure that they are all (still) valid?

Remove MAC Addresses After Change In Authentication

Finally, the time has come to implement 802.1x on the wireless network for a substantial number of these devices. These devices consist mainly of Windows machines and thin clients. Both are managed through either Microsoft Active Directory or a thin client management suite, so distributing the 802.1x settings is pretty straightforward. There are, however, some Windows and thin client devices that will remain on the MAC-filtered Wi-Fi networks for various reasons.

After a few tests, the migration of the new 802.1x devices started, but it leaves us with a MAC address database filled with addresses that can be removed, since they are no longer used. But how do you do that? Cisco ISE has a lot of features and is capable of generating rich reports about almost everything. However, it has no way of reporting on dot1x devices that still remain in the MAC address database as well. That is where I had to get creative.

First I explored the Cisco ISE Monitoring API, but that only gives active connections. There’s no way of exploring past (successful) authentications/authorizations. I needed a way to get current and past successful dot1x authentications, compare the MAC addresses associated with those entries to the MAC address database, and remove the matches from that database.

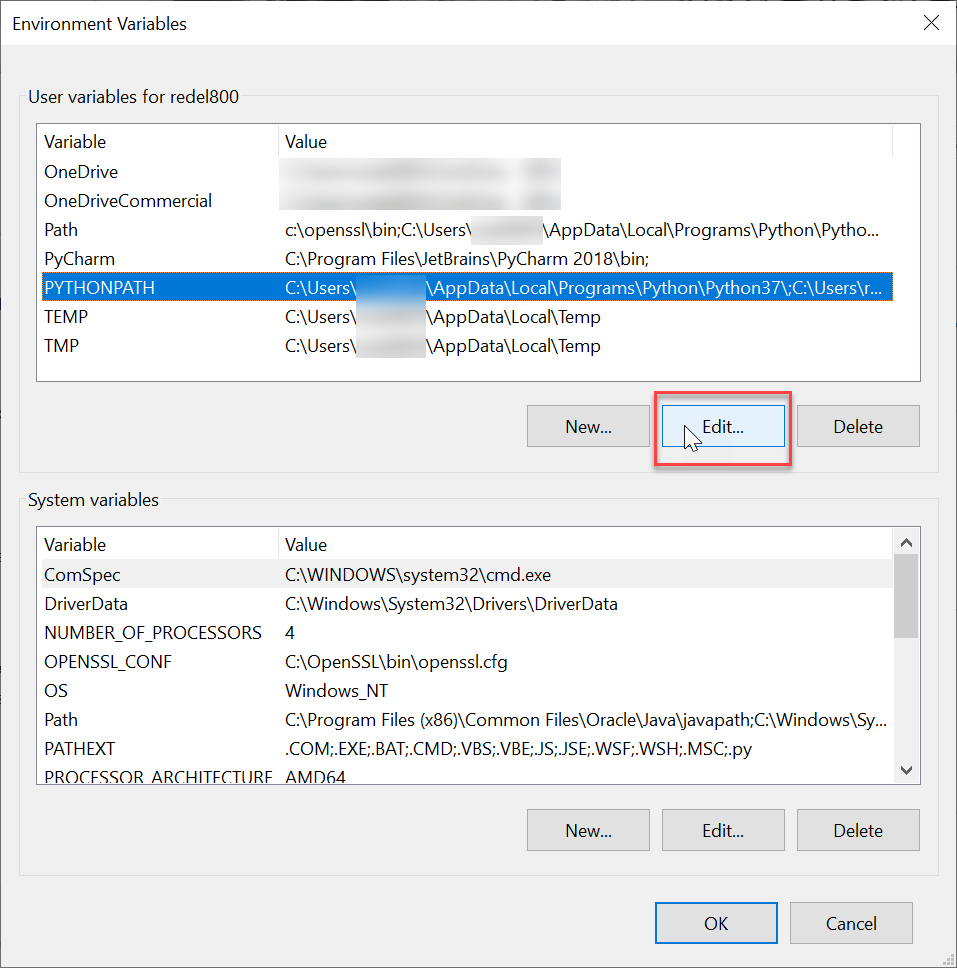

Eventually, I found two paths to accomplish this. The first is through the reporting module, where you can export all RADIUS authentications to CSV. By filtering these results in Excel or with a Python script, you can extract the MAC addresses that successfully authenticated with dot1x. Feed these MAC addresses to a script and remove them through the Cisco ISE ERS API. Or, if you’ve got nothing else to do, do it by hand.
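The CSV path can be sketched in Python roughly as follows. This is a minimal sketch, not a finished tool: the column names (`Endpoint ID`, `Authentication Method`, `Status`) and the values `dot1x` and `Pass` are assumptions about the export, so check them against the header row of your own report. The ERS part assumes the endpoint resource at `/ers/config/endpoint`, a `mac.EQ.` search filter and a `SearchResult` JSON wrapper. Verify all of that against the ERS SDK pages of your own ISE version before deleting anything.

```python
import base64
import csv
import io
import json
import re
import urllib.parse
import urllib.request

# Assumed column names and values; check the header row of your own export.
MAC_COLUMN = "Endpoint ID"
METHOD_COLUMN = "Authentication Method"
STATUS_COLUMN = "Status"


def normalize_mac(raw):
    """Return the MAC as AA:BB:CC:DD:EE:FF, or None if the value is not a MAC."""
    compact = re.sub(r"[-:.\s]", "", raw or "")
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", compact):
        return None
    compact = compact.upper()
    return ":".join(compact[i:i + 2] for i in range(0, 12, 2))


def dot1x_macs(csv_file):
    """Collect MACs from rows that passed authentication with dot1x."""
    macs = set()
    for row in csv.DictReader(csv_file):
        method = (row.get(METHOD_COLUMN) or "").strip().lower()
        status = (row.get(STATUS_COLUMN) or "").strip().lower()
        mac = normalize_mac(row.get(MAC_COLUMN))
        if method == "dot1x" and status == "pass" and mac:
            macs.add(mac)
    return macs


def ers_request(base_url, path, user, password, method="GET"):
    """Build (but do not send) an ERS request with basic auth and JSON headers."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {"Accept": "application/json", "Authorization": f"Basic {token}"}
    return urllib.request.Request(base_url.rstrip("/") + path,
                                  headers=headers, method=method)


def delete_endpoint(base_url, user, password, mac, opener=urllib.request.urlopen):
    """Look up the endpoint ID for a MAC and delete it. Returns True if deleted."""
    query = urllib.parse.urlencode({"filter": f"mac.EQ.{mac}"})
    lookup = ers_request(base_url, f"/ers/config/endpoint?{query}", user, password)
    with opener(lookup) as response:
        # Assumed response shape: {"SearchResult": {"resources": [{"id": ...}]}}
        resources = json.load(response)["SearchResult"]["resources"]
    if not resources:
        return False  # MAC is not (or no longer) in the database
    path = f"/ers/config/endpoint/{resources[0]['id']}"
    with opener(ers_request(base_url, path, user, password, "DELETE")):
        return True
```

The `opener` parameter exists so the deletion logic can be dry-run against a fake before it is pointed at a real ISE node. With the default `urllib.request.urlopen`, HTTP errors raise an exception, and a node with a self-signed certificate will need its CA added to the trust store first.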

The other path is to follow the syslog output and parse that feed. The downside is that you need access to the syslog files, or you have to add an additional syslog server to Cisco ISE that you can access (e.g. your scripting machine). The syslog route is also a bit trickier, since the syslog lines are very long and you have to combine the correct lines to get the full message. Parsing CSV is much easier, so I followed that path first.
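To give an idea of what combining the correct lines could look like, here is a hedged sketch in Python. It assumes that every syslog line carries a header of the form `<category> <message id> <total segments> <segment index>` in front of the payload, for example `CISE_Passed_Authentications 0000000001 2 0 ...`. That layout is an assumption, so compare it with a few lines from your own feed before relying on it.

```python
import re
from collections import defaultdict

# Assumed header layout: "<category> <message id> <total segments> <segment index> <payload>"
SEGMENT = re.compile(
    r"(?P<cat>CISE_\w+) (?P<id>\d+) (?P<total>\d+) (?P<seq>\d+) (?P<payload>.*)"
)


def reassemble(lines):
    """Yield (category, full_message) once all segments of a message have arrived."""
    pending = defaultdict(dict)
    for line in lines:
        match = SEGMENT.search(line)
        if not match:
            continue  # not an ISE message segment
        key = (match["cat"], match["id"])
        pending[key][int(match["seq"])] = match["payload"]
        if len(pending[key]) == int(match["total"]):
            parts = pending.pop(key)
            # Join the segments in index order, regardless of arrival order.
            yield match["cat"], "".join(parts[i] for i in sorted(parts))


feed = [
    "Jan  1 00:00:00 ise CISE_Passed_Authentications 0000000001 2 0 UserName=alice, Calling-Station-",
    "Jan  1 00:00:00 ise CISE_Passed_Authentications 0000000001 2 1 ID=00-11-22-AA-BB-CC,",
]
for category, message in reassemble(feed):
    print(category, message)
```

Segments are kept in memory until a message is complete, so a long-running parser would also need to expire messages whose remaining segments never arrive.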

Dormant/Obsolete MAC Addresses

Another issue with static MAC addresses (and even local accounts) is that they tend to remain in the MAC database indefinitely. Long after devices have been decommissioned, the MAC address remains, which leaves a security hole to be exploited.

By using the generated ‘RADIUS Authentications’ reports over a longer period (e.g. 90 days), you can cross-reference the MAC addresses in the database with recent successful authentications of those MAC addresses. Any address without a recent successful authentication is a candidate for removal.
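The cross-reference itself is a small set comparison. The sketch below assumes you already have the database MAC addresses as a set and a dictionary with the most recent successful authentication per MAC address; the function name `dormant_macs` and the 90-day default are only illustrative.

```python
from datetime import datetime, timedelta


def dormant_macs(database_macs, last_seen, now, max_age_days=90):
    """Return database MACs with no successful authentication since the cutoff."""
    cutoff = now - timedelta(days=max_age_days)
    dormant = []
    for mac in sorted(database_macs):
        seen = last_seen.get(mac)
        if seen is None or seen < cutoff:
            dormant.append(mac)
    return dormant


now = datetime(2020, 6, 30)
database = {"00:11:22:AA:BB:CC", "00:11:22:AA:BB:DD", "00:11:22:AA:BB:EE"}
last_seen = {
    "00:11:22:AA:BB:CC": datetime(2020, 6, 29),  # seen yesterday
    "00:11:22:AA:BB:DD": datetime(2020, 1, 15),  # last seen months ago
}
print(dormant_macs(database, last_seen, now))
# prints ['00:11:22:AA:BB:DD', '00:11:22:AA:BB:EE']
```

Treat the result as a review list rather than a delete list: a MAC address that never shows up in the reports is not necessarily unused.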

There are some caveats, though:

You need a session timeout on the network, either statically defined on the network device or set by a RADIUS return attribute, so that devices have to re-authenticate periodically. Otherwise a valid device might not show up in the logs and you might remove it by mistake.

RADIUS reporting only goes back 30 days, so you have to combine several (scheduled) reports to cover a longer time span. There used to be a custom time frame option, but it seems to have disappeared in version 2.6.
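Combining several 30-day exports comes down to keeping the most recent timestamp per MAC address across all files. Below is a minimal sketch; the column names `Logged At` and `Endpoint ID` and the timestamp format are assumptions that you will need to adjust to your own export.

```python
import csv
import io
from datetime import datetime

# Assumed column names and timestamp format; adjust to your own export.
MAC_COLUMN = "Endpoint ID"
TIME_COLUMN = "Logged At"
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"


def merge_last_seen(report_files):
    """Return {mac: latest timestamp} across any number of CSV reports."""
    last_seen = {}
    for report in report_files:
        for row in csv.DictReader(report):
            mac = (row.get(MAC_COLUMN) or "").strip().upper()
            stamp = (row.get(TIME_COLUMN) or "").strip()
            if not mac or not stamp:
                continue  # skip incomplete rows
            seen = datetime.strptime(stamp, TIME_FORMAT)
            if mac not in last_seen or seen > last_seen[mac]:
                last_seen[mac] = seen
    return last_seen


april = io.StringIO(
    "Logged At,Endpoint ID\n"
    "2020-04-01 08:00:00,00:11:22:aa:bb:cc\n"
)
may = io.StringIO(
    "Logged At,Endpoint ID\n"
    "2020-05-01 09:30:00,00:11:22:AA:BB:CC\n"
    "2020-05-02 10:00:00,00:11:22:AA:BB:DD\n"
)
merged = merge_last_seen([may, april])
```

Because only the latest timestamp per address is kept, the order in which the reports are read does not matter, and overlapping report periods are harmless.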